Real-Time Generative AI Applications: Harnessing the Power of Stream Processing

- Gang Tao

- Mar 26, 2024

- 6 min read

Generative AI is dramatically reshaping the software industry by positioning Large Language Models (LLMs) as the new power engine driving applications. In this blog, I’ll discuss how to leverage stream processing to avoid the limitations of common LLM architectures.

LLMs exhibit remarkable power, but have widely documented limitations:

Data Freshness: LLMs are usually trained on a large amount of existing, historical, public data, so they lack knowledge of what happened recently or what is happening now. They also have no knowledge of specific private data.

Short Memory: LLMs usually have a limited context window, meaning they cannot remember all useful state. Even though context windows keep growing with new LLMs, short memory remains a limitation. (See reference graph below)

Hallucination: LLMs sometimes generate responses that contain factual inaccuracies, inconsistencies, or contextually inappropriate information. This happens because the model generates text based on patterns learned from the training data, sometimes without proper regard for coherence or accuracy.

Reference: Short memory is always a limitation of LLMs.

One effective and popular solution to these challenges is Retrieval-Augmented Generation (RAG).

RAG is the process of optimizing the output of an LLM so that it references an authoritative knowledge base outside of its training data sources before generating a response.

Let's see a real example of how RAG works.

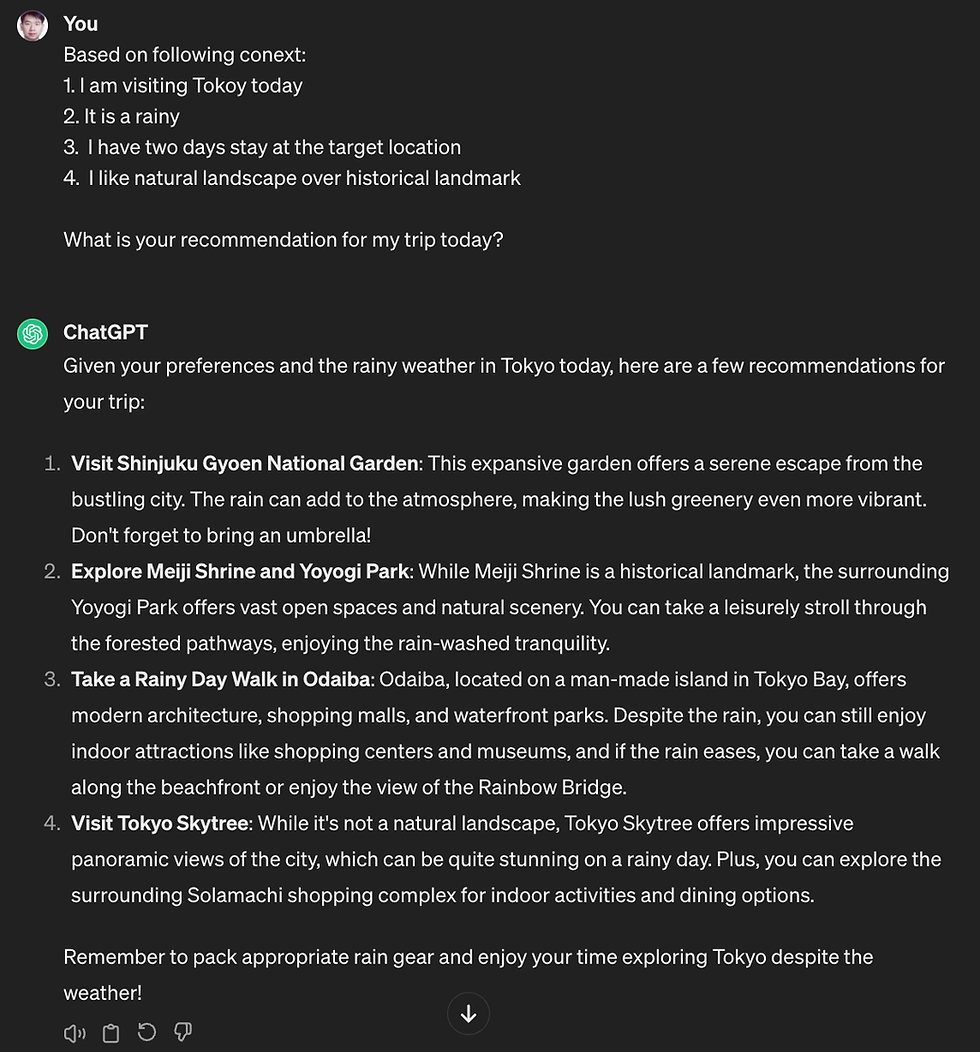

I am asking ChatGPT to provide some personalized recommendations for my upcoming trip, but due to lack of context, ChatGPT cannot make any recommendations.

Once I provide contextual information from external knowledge in the prompt, ChatGPT can make a detailed recommendation for my trip. Here's the context:

Private data: where the trip is and how long it lasts

Real-time data: what the weather is right now

Historical data: what the user prefers, based on the user’s past behavior

So, when building these trip recommendation systems, the main problem to solve is how to access data from different systems and construct context.

By providing related context from external data sources, RAG solves the data freshness problem by accessing real-time weather information, and the private data problem by accessing the user's trip plan.

RAG usually works together with a vector store, which acts as long-term memory for an LLM. In the above case, the user’s preferences can be stored as vectors in the vector database. With the similarity search the vector store provides, what the user likes or dislikes can be easily retrieved.
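To make the retrieval step concrete, here is a minimal sketch of similarity search over stored preference vectors. It uses plain Python rather than a real vector database, and the three-dimensional vectors and the `top_k` helper are made up for illustration. In practice the vectors would come from an embedding model and the search would be handled by the vector store.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def top_k(query, store, k=2):
    """Return the k stored texts whose vectors are most similar to the query."""
    ranked = sorted(
        store,
        key=lambda item: cosine_similarity(query, item[1]),
        reverse=True,
    )
    return [text for text, _ in ranked[:k]]

# Hypothetical preference vectors; the axes loosely mean (outdoors, food, museums).
preferences = [
    ("likes hiking", [0.9, 0.1, 0.0]),
    ("likes street food", [0.1, 0.9, 0.1]),
    ("dislikes museums", [0.0, 0.1, 0.9]),
]

# A query about outdoor activities lands closest to the hiking preference.
print(top_k([1.0, 0.2, 0.0], preferences, k=1))
```

A real vector database replaces the linear scan in `top_k` with an index, but the idea is the same: the query is turned into a vector and the nearest stored vectors are returned as context.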

Building LLM applications is fundamentally a data engineering problem. How to build an effective RAG-based data processing system is the key to a successful LLM application.

A thoughtful LLM application architecture will have the following components:

A real-time data processing pipeline used to process all sorts of data

A vector store used to index historical embedding data

A context builder

An LLM

There are lots of articles about why a vector database is required for LLMs, but why do you need a real-time data pipeline in this case?

As I mentioned, the key to making an LLM work is providing high-quality, up-to-date, well-structured data as the context. This is exactly what a real-time data processing pipeline can do:

Access various data sources and join these data sources into one unified data view

Process data as soon as it is generated to keep the data up to date

Filter, transform, and enrich the data to make it more usable and understandable

Continuously ingest data into the historical vector store, making sure the vector storage contains all up-to-date information when it is required by the LLM context.
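The first three responsibilities above can be sketched with plain Python generators. This illustrates the pattern only and is not how Kafka or Proton work internally. The event fields, the `trips` lookup table, and all function names are hypothetical.

```python
def weather_events():
    """Stand-in for a real-time source such as a Kafka topic."""
    yield {"city": "Paris", "temp_c": 18, "ts": 1}
    yield {"city": "Paris", "temp_c": None, "ts": 2}  # bad reading
    yield {"city": "Tokyo", "temp_c": 25, "ts": 3}
    yield {"city": "Paris", "temp_c": 21, "ts": 4}

# Stand-in for private data held in another system.
trips = {"alice": {"city": "Paris", "days": 3}}

def clean(events):
    """Filter: drop events with a missing temperature reading."""
    for event in events:
        if event["temp_c"] is not None:
            yield event

def enrich(events):
    """Transform: add a Fahrenheit field for easier downstream use."""
    for event in events:
        yield {**event, "temp_f": round(event["temp_c"] * 9 / 5 + 32, 1)}

def latest_by_city(events):
    """Process each event as it arrives, keeping only the newest per city."""
    state = {}
    for event in events:
        state[event["city"]] = event
    return state

def unified_view(user, trips, weather):
    """Join private trip data with the latest weather for the trip's city."""
    trip = trips[user]
    return {"user": user, **trip, "weather": weather.get(trip["city"])}

weather = latest_by_city(enrich(clean(weather_events())))
view = unified_view("alice", trips, weather)
print(view)
```

This toy source is finite, so `latest_by_city` can return once the events run out. A real stream is unbounded, which is why a stream processor keeps this kind of state updated continuously instead of computing it once.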

LLM Application Architecture

Real-time data streaming

Apache Kafka is your best choice for transporting all your real-time data, not only because it is a mature player in this area, but also because of its rich community and ecosystem support.

Kai Waehner (Global Field CTO of Confluent) shares this great illustration of the ecosystem, and you can refer to his blog for other choices.

Real-time stream processors

The most popular stream processing tool is Apache Flink, a highly scalable and reliable solution. However, managing and operating data pipeline infrastructure with Flink is not easy. Because of its complexity, you usually need a dedicated engineering team to run it.

There are new stream processing tools that are designed to eliminate the complexity of stream processing, such as Timeplus Proton, Bytewax, and RisingWave. I will focus on how we solve for this at Timeplus, with our open-source engine, Timeplus Proton.

Timeplus Proton is a powerful streaming SQL engine designed for tasks like streaming ETL, real-time anomaly detection, and real-time feature processing for machine learning. It's built for high performance using C++ and optimized with SIMD. Proton is lightweight (<500MB), standalone (no JVM or dependencies required), and can run on various platforms including Docker or low-resource instances like AWS t2.nano. It extends the functionalities of ClickHouse with stream processing capabilities.

Timeplus Proton could be an excellent choice for Retrieval-Augmented Generation (RAG) in language models due to its efficient streaming capabilities and performance. With its ability to handle high-volume data streams and provide low-latency processing, Proton can support real-time retrieval of relevant information to augment language generation tasks. Its integration with streaming data platforms like Kafka enables seamless retrieval of up-to-date information, enhancing the context and relevance of generated text.

Additionally, Proton's support for thousands of SQL functions and high-speed querying makes it suitable for efficiently retrieving and processing the vast amounts of data needed for RAG tasks. Proton is designed to be easy to use with Kafka, which makes it a great choice for building a real-time data pipeline for your LLM applications.

Vector Database

There are many vector databases available in today’s market. Choose one of them as your LLM's long-term memory.

Refer to this blog by RisingWave founder, Yingjun Wu, for more information about choosing a vector database.

In the realm of database choices for vector storage and search, opting for a dedicated vector database might not always be the optimal route. Why? Well, consider this: you might already have robust data analysis systems in place, leveraging databases like ClickHouse or PostgreSQL. In such scenarios, it's highly beneficial to use these existing components to meet your vector storage and search needs, particularly those introduced by LLM implementations.

If you're already utilizing ClickHouse for your data analysis requirements, I strongly advocate for extending its utility as your vector database. Leveraging ClickHouse in this capacity not only streamlines your infrastructure but also ensures seamless integration with your existing workflows. For further insights into this approach, dive into the following blogs from ClickHouse for a deeper understanding: Searching Vectors in ClickHouse, Building a RAG Pipeline for Google Analytics, and Building a Chatbot for Hacker News and Stack Overflow.

Embedding

An embedding is a mathematical representation of text in a numerical vector space. Some vector databases, such as Chroma DB, provide embedding functions, but in most cases you need to choose your own.

If you want to keep things simple, you can just use the embedding API provided by OpenAI. Or you can run an embedding model yourself. There are lots of different embedding models available on Hugging Face, for example the SFR-Embedding-Mistral model developed by Salesforce.

If your task is in a very specific domain, you may also want to train your own embedding model.
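To illustrate what an embedding function does, here is a toy sketch that maps text to a fixed-length vector by hashing words into buckets. It is a stand-in for a real embedding model, not a substitute: it captures word overlap only, not meaning. The function name `toy_embed` and the dimension of 16 are arbitrary choices for this example.

```python
import hashlib
import math

def toy_embed(text, dim=16):
    """Map text to a unit-length vector by counting hashed words per bucket."""
    vec = [0.0] * dim
    for word in text.lower().split():
        # A stable hash keeps the result identical across Python processes.
        digest = hashlib.sha256(word.encode("utf-8")).digest()
        bucket = int.from_bytes(digest[:4], "big") % dim
        vec[bucket] += 1.0
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else vec

def dot(a, b):
    """Dot product; equals cosine similarity for unit-length vectors."""
    return sum(x * y for x, y in zip(a, b))

a = toy_embed("sunny beach holiday")
b = toy_embed("beach holiday in the sun")
c = toy_embed("quarterly tax report")

# Texts that share words always get a positive score.
print(dot(a, b))
# Unrelated texts usually score lower, though hash collisions can add noise.
print(dot(a, c))
```

A trained embedding model goes further by placing texts with similar meaning close together even when they share no words, which is what makes similarity search useful for retrieval.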

Context Builder

If your task is simple and you are familiar with prompt engineering tricks, you can just write some Python code to build your prompt. Or you can use a tool such as LangChain or LlamaIndex to help with prompt generation.
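For the simple case, a context builder can be a few lines of Python that merge the three kinds of context from the trip example into one prompt. This is a minimal sketch: the function name `build_prompt`, the field names, and the sample values are hypothetical, and sending the prompt to a model is left out.

```python
def build_prompt(question, trip, weather, preferences):
    """Assemble private, real-time, and historical context into one prompt."""
    likes = ", ".join(preferences) if preferences else "unknown"
    context = [
        f"Trip: {trip['days']} days in {trip['city']}",          # private data
        f"Current weather: {weather['condition']}, {weather['temp_c']} C",  # real-time data
        f"User preferences: {likes}",                            # historical data
    ]
    return (
        "Use the context below to answer the question.\n\n"
        "Context:\n- " + "\n- ".join(context) + "\n\n"
        f"Question: {question}"
    )

prompt = build_prompt(
    question="What should I do on my trip?",
    trip={"city": "Paris", "days": 3},
    weather={"condition": "sunny", "temp_c": 21},
    preferences=["likes hiking", "likes street food"],
)
print(prompt)
```

Keeping the builder as a plain function makes it easy to test and to swap for a LangChain or LlamaIndex template later if the prompt logic grows.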

LLM

Finally, you need an LLM. Besides the ChatGPT models provided by OpenAI, there are lots of open-source and closed-source models to choose from. Which one to use depends entirely on your own use case and requirements.

Summary

LLMs revolutionize our data interactions, and real-time data is the best source to make LLMs work as expected. Enterprises and organizations are putting more and more data into streaming platforms such as Apache Kafka, which can work as the foundation for the real-time data architecture of LLM applications. Application developers, equipped with a top-tier tool like Timeplus Proton, can extract invaluable information from streaming data, and this value will be magnified by the LLMs in your generative AI (GAI) applications.

In today’s blog, I've explained why you need a real-time streaming data pipeline for your LLM applications and why Apache Kafka and Proton are great tools for this job. I also listed all the key components required to build a GAI application. I hope this blog helps you design your next LLM application.

Want to know more about Timeplus Proton? Visit the Timeplus Proton GitHub Repo.